One indicator of a trend in skateboarding is how much media coverage that trend receives (it goes both ways of course – trends influence what is covered, and what is covered influences trends). Thrasher magazine has an online archive of all of its covers (and some full issues too!) spanning from 1981 through 2012. These magazine covers provide a snapshot of what's interesting or popular in the skateboarding world at the time, so it seemed like a fun project to go through them and categorize the types of skateboarding represented. Over 300 covers later, I did just that. I also did something that some skate nerds may abhor - I counted stairs. A LOT of stairs! Skateboarders like stairs!!!

Okay, okay - results first and then I'll talk about the more boring technical details for those who are interested.

Results

Cover Trends

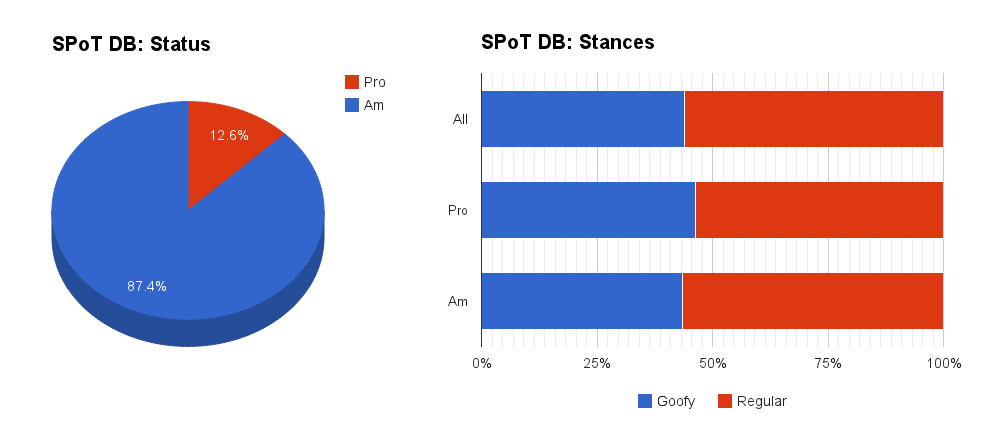

Figure 1 shows the overall makeup of Thrasher covers. It's not much of a surprise that the majority feature street tricks, since roughly 2/3 of the covers were published after the street skating boom of the early 90s. This shift away from parks and transition skating and onto the streets can be seen in Figure 2. Here, "transition" includes vert, swimming pools and park transition. 1991 marks the real turning point, with transition and street skating each accounting for about 42% of the covers. This turning point also coincides with the general lull in skateboarding's popularity in the early 90s, and the so-called "death of vert skating".

I do think there's a bit of a discrepancy between this trend and the "everyday skater" of today. After skateboarding became very popular in the early 00s (thanks THPS!), lots of skateparks popped up, which led to a resurgence of park and transition skating. On top of that, with more skateboarders around, more street spots now employ skating countermeasures (e.g. skate stoppers, security). Both of these would lead one to expect more skatepark or transition covers. I think the discrepancy arises because, while many pros regularly skate at skateparks, they turn to the streets when it comes time to "take care of business" (shoot photos or film).
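For anyone curious how per-year percentages like the ones in Figure 2 can be computed, here is a minimal Python sketch. It assumes each cover is recorded as a (year, category) pair; the category labels (`"vert"`, `"pool"`, `"park_transition"`, etc.) and the function names are hypothetical, not taken from the actual data file. It merges the transition labels using the definition of "transition" given earlier and divides by the number of covers in each year.

```python
from collections import Counter, defaultdict

# Hypothetical fine-grained labels merged into "transition",
# following the post's definition (vert, swimming pools, park transition).
TRANSITION_LABELS = {"vert", "pool", "park_transition"}


def broad_category(label: str) -> str:
    """Collapse a fine-grained cover label into a broad category."""
    return "transition" if label in TRANSITION_LABELS else label


def yearly_shares(covers: list[tuple[int, str]]) -> dict[int, dict[str, float]]:
    """Return, for each year, the percentage of covers in each broad category."""
    counts = defaultdict(Counter)
    for year, label in covers:
        counts[year][broad_category(label)] += 1
    shares = {}
    for year, counter in sorted(counts.items()):
        total = sum(counter.values())
        shares[year] = {cat: 100.0 * n / total for cat, n in counter.items()}
    return shares


if __name__ == "__main__":
    # Toy input for illustration only, NOT the real Thrasher data.
    toy = [(1991, "street"), (1991, "vert"), (1991, "pool"), (1991, "other")]
    print(yearly_shares(toy))
    # prints {1991: {'street': 25.0, 'transition': 50.0, 'other': 25.0}}
```

Merging labels before counting (rather than after) means a different definition of "transition" only requires changing the one set.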

Stairs!

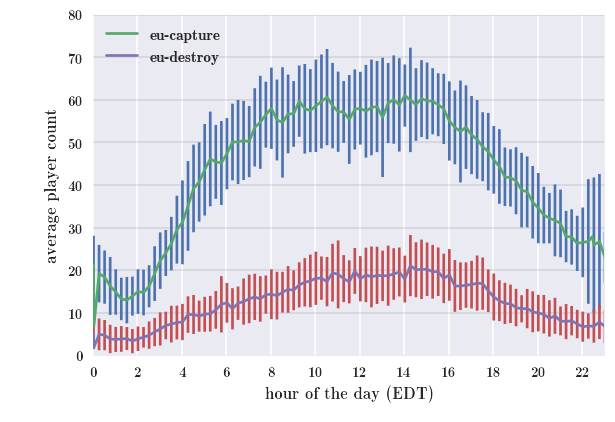

Figure 3 shows the number of stairs on the cover over the years. I'm sure you're all wondering about that crazy-looking outlier around the 2008 mark. It lies a whopping 6 standard deviations above the mean! This point corresponds to the November 2007 cover, with Steve Nesser doing something like a 49-stair 50-50 (I couldn't get a perfect count). This will forever be known as the "Nesser Point" by skateboarding historians. You can see the 50-50 here.
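The "6 standard deviations" figure is a z-score: the distance of a value from the mean, divided by the standard deviation. A minimal Python sketch is below. It assumes the sample standard deviation computed over all stair counts (outlier included); the convention isn't specified here, so treat that choice, and the hypothetical function name, as assumptions.

```python
import statistics


def std_devs_from_mean(value: float, data: list[float], sample: bool = True) -> float:
    """Return how many standard deviations `value` lies above (+) or below (-) the mean.

    Uses the sample standard deviation by default (needs at least 2 points);
    pass sample=False for the population version. Which convention the
    original analysis used is an assumption.
    """
    mean = statistics.mean(data)
    sd = statistics.stdev(data) if sample else statistics.pstdev(data)
    if sd == 0:
        raise ValueError("data has zero spread; the z-score is undefined")
    return (value - mean) / sd
```

Calling `std_devs_from_mean(49, all_stair_counts)` on the full list of counts would give the Nesser Point's distance from the mean. Note that including the outlier in the data inflates the standard deviation, so it slightly shrinks the outlier's own z-score.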

Figure 4 shows a zoomed-in version of Figure 3, with the data broken into groups so you can see trends in the smallest and largest number of stairs each year. We can see a bit of a divergence between the highest and lowest stair counts per year. I feel obligated to give this phenomenon some silly name, so I'll call it the "Tech-Gnar Funnel". The lowest counts tend to increase, but at a slower rate than the highest ones. This is because some skaters are still pushing technical limits on small (~8 stair) rails and stairs, while others are testing the upper limit of board and body resilience by constantly upping the stair count (more about this in a later post, perhaps).
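One way to put numbers on the Tech-Gnar Funnel is to take each year's smallest and largest stair count and fit a straight line through each series; a funnel shows up as the maxima having a steeper slope than the minima. The sketch below does that with a plain least-squares fit. The (year, stairs) input format and the function names are hypothetical, and the line fit is just one possible way to quantify the trend, not necessarily how Figure 4 was made.

```python
from collections import defaultdict


def yearly_min_max(stair_counts: list[tuple[int, int]]) -> dict[int, tuple[int, int]]:
    """Map each year to (fewest stairs, most stairs) among that year's covers."""
    by_year = defaultdict(list)
    for year, stairs in stair_counts:
        by_year[year].append(stairs)
    return {year: (min(s), max(s)) for year, s in sorted(by_year.items())}


def ols_slope(xs: list[float], ys: list[float]) -> float:
    """Ordinary least-squares slope of ys against xs (needs >= 2 distinct xs)."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def funnel_slopes(stair_counts: list[tuple[int, int]]) -> tuple[float, float]:
    """Return (slope of yearly minimums, slope of yearly maximums) in stairs per year."""
    extremes = yearly_min_max(stair_counts)
    years = list(extremes)
    lows = [low for low, _ in extremes.values()]
    highs = [high for _, high in extremes.values()]
    return ols_slope(years, lows), ols_slope(years, highs)


if __name__ == "__main__":
    # Toy input for illustration only, NOT the real cover data.
    toy = [(2000, 5), (2000, 10), (2001, 6), (2001, 14), (2002, 7), (2002, 18)]
    print(funnel_slopes(toy))  # prints (1.0, 4.0)
```

With the toy input, the minima rise by 1 stair per year and the maxima by 4, which is what a widening funnel looks like in numbers.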

Conclusions

Thrasher Magazine has predominantly run covers that feature street skating, and the percentage of these covers has increased over time, playing a role in the "coverage-trend" feedback process. The average number of stairs featured on the cover has increased dramatically since the early 90s, giving rise to the Nesser Point and the Tech-Gnar Funnel.

I would really like to do a similar analysis with other magazines to get a better picture of overall trends, as well as to compare them with each other. Unfortunately, at this time no other magazine offers an online archive (that I know of; if I'm wrong about this, please let me know!). Thrasher seems to have a reputation for being more transition-oriented, so it would be neat to compare its covers to those of other major magazines and see if that's the case.

If you'd like to see a breakdown of this data in any other way, let me know, or if you're feeling ambitious, give it a shot yourself!

A Few Notable Covers

This guy started a revolution. He didn’t invent the ollie, but he somehow figured out that you can take it from a vertical wall (which helps get you in the air) to a horizontal surface. We all take this trick for granted today, but I would have loved to see people’s reactions when this cover came out.

Natas. This cover was probably very confusing at the time. “How did he get up there?”

This is probably the first handrail cover. It’s not a “traditional” handrail in that it doesn’t go down any stairs and it isn’t very tall from the side approached, but it certainly is a railing for someone to put their hand on. And Ron Allen is still ripping!

This is the first serious handrail cover. Frankie Hill, of course.

Boring Details

Categorization

It’s difficult to chop a smooth continuum into discrete categories, and this project was no exception. Most of the covers were easy because they obviously fell into a certain category. There were a couple of slightly ambiguous cases, though (is this street or transition skating? It’s a street spot, but it’s not really a modern “street” trick). At the end of the day I had to make a subjective decision (“I know it when I see it”). Since there were so few of these cases, they don’t have a meaningful impact on the larger trends.

Counting stairs

Skateboarders like to skate lots of different types of stairs, which complicated the counting process. For example, a 3-flat-3-flat-3 triple set is clearly larger than a 9 stair, but how much larger? It depends. For that reason, double/triple/n-tuple sets were omitted, and the final count included only standard stair sets that were easily countable. I also omitted large block stairs (e.g. Barcelona’s four block) for the same reason. The number of these omissions is small, and including them in the data has barely any effect on the long-term trends. (I would rather have fewer data points that are clearly defined than more data points that are sloppy!)
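The inclusion rule above boils down to a filter. Here's a minimal Python sketch, assuming each stair cover is stored with a hypothetical `set_type` label (`"standard"`, `"multi"` or `"block"`); the record layout and function name are illustrative only. Returning the omitted covers as well makes it easy to check how many were dropped.

```python
from dataclasses import dataclass


@dataclass
class StairCover:
    """One cover featuring stairs (hypothetical record layout)."""
    year: int
    set_type: str  # hypothetical labels: "standard", "multi" (e.g. 3-flat-3-flat-3), "block"
    stairs: int


def split_countable(covers: list[StairCover]) -> tuple[list[StairCover], list[StairCover]]:
    """Split covers into (kept, omitted).

    Only standard single stair sets are kept; multi-flat sets and large block
    stairs are omitted, mirroring the rule described in the post.
    """
    kept = [c for c in covers if c.set_type == "standard"]
    omitted = [c for c in covers if c.set_type != "standard"]
    return kept, omitted
```

Keeping the omitted list around, instead of silently discarding it, is what lets you rerun the trends with those covers added back in and confirm they barely move the results.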

There is also the problem of actually counting the stairs. Most of the cases were easy, but once you get past 15 or so, they become very tedious to count (thanks, Steve Nesser). Still, I'm confident that all the numbers are accurate to within a stair or two.

Data

The data are available here. Take a look, and if you disagree with some of my categorization decisions or stair counts, please let me know in the comments!